|  |

Note, the cost of a merge sort (in terms of array assignments) can be broken into two parts:

With this in mind, if we assume:

The above clearly defines $C(n)$, but does so in a recursive way. As a result, it may not be obvious how this cost function compares to those for other sorting methods. As it happens, $C(n)$ is $O(n \ln n)$. We can prove this in a variety of ways -- and looking at multiple proofs of this result will give us a nice survey of some useful techniques for dealing with recursive cost functions. With this in mind, we'll consider 4 different proofs below.

Note that for each proof, we make a simplifying assumption that $n=2^m$ for some non-negative integer $m$, so that the array and all of the sub-arrays considered always break evenly into two equal length halves. This simplifies the above recursive relationship to what is shown below.

$$C(n) = 2 C(n/2) + n \quad \textrm{ for } n \gt 1, \textrm{ with } C(1) = 0$$Importantly, note that $C(n)$ -- which is in terms of array assignments -- also then serves as an upper bound on the cost in terms of comparisons.

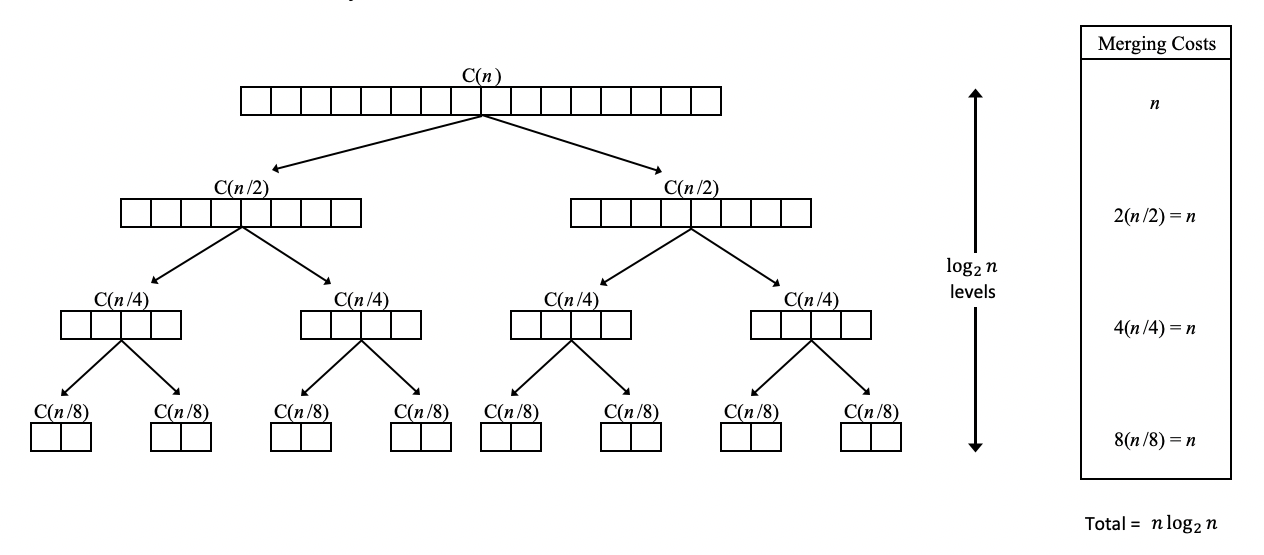

To find the cost function $C(n)$, consider what happens when one splits a given array (or sub-array) into two halves, sorts them, and then merges the sorted halves back together again. One will ultimately need $n$ array assignments to accomplish the final merge. The computation of the rest of the cost now requires we look at sorting the two halves.

Each half is now $n/2$ long and will each require $n/2$ array assignments to merge back together once they themselves are broken in half and their halves sorted. This is a total of $2(n/2) = n$ array assignments. This happens again and again, each time picking up $4(n/4) = n$ array assignments, and then $8(n/8) = n$ array assignments, etc., until no more merges are necessary -- as the tree below suggests. (Hint: to see why we refer to this picture as a "tree", look at it upside-down.)

As can be seen, each level then contributes a total of $n$ array assignments. If the tree had $k$ levels, then the total cost would be $kn$. So the question is "How many levels should we have for a $n=2^m$ element array?" Of course, we can only divide $n=2^m$ in half a maximum of $m$ times, suggesting that the number of levels is $m = \log_2 n$.

Thus, the total cost is given by $C(n) = n \log_2 n$

QED.

We can also use the recursive relationship $$C(n) = 2 C(n/2) + n \quad \textrm{ for } n \gt 1, \textrm{ with } C(1) = 0$$ to repeatedly rewrite $C(n)$ in terms of the same function, but with smaller and smaller inputs. Doing so reveals a pattern that can be exploited to find a closed-form expression for $C(n)$:

$$\begin{array}{rclc} C(n) &=& 2C(2^m/2) + 2^m & \quad \scriptsize{\textrm{given our assumption that $n=2^m$}}\\\\ &=& 2C(2^{m-1}) + 2^m & \quad \scriptsize{\textrm{now let's use the recursive relationship to rewrite $C(2^{m-1})$...}}\\\\ &=& 2[ 2 C(2^{m-2}) + 2^{m-1}] + 2^m & \quad \scriptsize{\textrm{we can of course do this multiple times...}}\\\\ &=& 2^2 C(2^{m-2}) + 2 \cdot 2^{m} &\\\\ &=& 2^2[ 2 C(2^{m-3}) + 2^{m-2}] + 2 \cdot 2^{m} &\\\\ &=& 2^3 C(2^{m-3}) + 3 \cdot 2^{m} & \quad \scriptsize{\textrm{by now the pattern should be clear...}}\\\\ &=& 2^k C(2^{m-k}) + k \cdot 2^{m} & \quad \scriptsize{\textrm{this holds for any integer $k$ up to $m$, thus...}}\\\\ &=& 2^m C(2^{m-m}) + m \cdot 2^{m} & \quad \scriptsize{\textrm{this holds when we consider $k=m$}}\\\\ &=& 2^m C(1) + m \cdot 2^{m} & \quad \scriptsize{\textrm{but recall the base case - $C(1)=0$...}}\\\\ &=& m \cdot 2^m & \quad \scriptsize{\textrm{now note that $n = 2^m$ implies $m = \log_2 n$...}}\\\\ &=& (\log_2 n) \cdot n\\\\ \end{array}$$Thus, the cost function $C(n)$ is again $O(n \ln n)$, as claimed.

QED.

We can also prove the running-time cost function $C(n)$ for a merge sort is $O(n \ln n)$, when $n = 2^m$ for some non-negative integer $m$, by appealing to the principle of mathematical induction.

First consider the base case when $m=0$. Note $2^0 \cdot \log_2 2^0 = 1 \cdot 0 = 0$. As this agrees with $C(1) = 0$, the base case is established.

Turning our attention to the inductive step, we suppose the result holds for some non-negative integer $m=k$. That is to say, we assume $C(2^k) = 2^k \log_2 2^k$ (the inductive hypothesis). We then try to argue that it must then also hold for $m=k+1$. In other words, we need to show that $C(2^{k+1}) = 2^{k+1} \log_2 2^{k+1}$. With this in mind, consider the following:

$$\begin{array}{rcll} C(2^{k+1}) &=& 2 \cdot C(2^k)+2^{k+1} & \scriptsize{\textrm{using the recursive definition of} C(n)}\\\\ &=& 2(2^k \log_2 2^k) + 2^{k+1} & \scriptsize{\textrm{using the inductive hypothesis to replace } C(2^k)}\\\\ &=& 2^{k+1} \log_2 2^k + 2^{k+1} & \\\\ &=& 2^{k+1} (\log_2 2^{k+1}/2) + 2^{k+1} & \\\\ &=& 2^{k+1} (\log_2 2^{k+1} - 1) + 2^{k+1} & \\\\ &=& 2^{k+1} \log_2 2^{k+1} - 2^{k+1} + 2^{k+1} & \\\\ &=& 2^{k+1} \log_2 2^{k+1} & \end{array}$$which is what we sought to show. Hence by the principle of mathematical induction, $C(2^m) = 2^m \log_2 2^m$ holds for all non-negative integers $m$. Recalling $n=2^m$, we have $C(n) = n \log_2 n$ which is again $O(n \ln n)$.

QED.

Let us remind ourselves again of the recursive definition of $C(n)$, when $n$ is a power of $2$:

$$C(n) = 2 C(n/2) + n \quad \textrm{ for } n \gt 1, \textrm{ with } C(1) = 0$$Suppose $n \gt 1$. Dividing both sides by $n$ yields $$\begin{array}{rcl} \displaystyle{\frac{C(n)}{n}} &=& \displaystyle{\frac{2 C(n/2)}{n} + 1}\\\\ &=& \displaystyle{\frac{C(n/2)}{n/2} + 1} \quad \quad \scriptsize{\textrm{now substitute } 2 C(n/4) + n/2 \textrm{ in place of } C(n/2)}\\\\ &=& \displaystyle{\frac{2 C(n/4) + n/2}{n/2} + 1}\\\\ &=& \displaystyle{\frac{C(n/4)}{n/4} + 1 + 1}\\\\ &=& \cdots\\\\ &=& \displaystyle{\frac{C(n/n)}{n/n} + \underbrace{1 + 1 + \cdots + 1}_{\log_2 n}} \quad \quad \textrm{ Then, recalling $C(n/n) = C(1) = 0$}\\\\ &=& \log_2 n \end{array}$$

Having shown $\displaystyle{\frac{C(n)}{n} = \log_2 n}$, we multiply both sides by $n$ to obtain the desired result $$C(n) = n \log_2 n$$

QED.